Futures Worth Building, Futures Worth Fighting For

On March 15th, 2025, I was honored to be invited to speak at Funding the Commons: Infrastructures of Resilience, a conference hosted at the awe-inspiring Internet Archive in San Francisco, a testament to the essential gift that open libraries are to our world. The event took place amidst a purge of public information and public officials by the Trump Administration. The conference brought together leaders stewarding open technologies and public goods who are working to build infrastructures to support shared flourishing. See all the talks.

I did something a little out of my comfort zone for this event, as I felt that the moment called for something different. I don’t typically write out my remarks when I speak, but this time I did, and one added benefit is that I can share them here! What follows is Futures Worth Building, my remarks at Funding the Commons: Infrastructures of Resilience.

In this room, there are builders. There are climate hackers. There are librarians and archivists. Wisdom keepers and philanthropists. There are institution navigators and extitution bridgers. In this room, there are dreamers.

And in this room, there’s a dream.

A dream for better things. Shared futures worth building. Public commons worth tending. A vision of the way that things can be, should be. An understanding that the future belongs to the many, not just the few.

And all around us, there are people who can feel it. People who know that this isn’t the way things should be. And they know that many of the systems that exist aren’t working (for them). They feel lost. Afraid. Even angry at what they know—in their bones—is not right. And they’re grasping for our common dream.

But they’re being sold a false promise. They’re being told that there is no other way. That it’s too late. That there isn’t enough. That they can’t trust other people. They can’t trust themselves.

The false promise says “Only I can protect you.”

And in following that false promise, people erect walls, burn libraries, and drown in misinformation and rising waters. People shut doors instead of opening them. Deplete resources instead of cultivating them. And in doing so, lay waste to the things that help us thrive.

It doesn’t have to be like this.

My name is B Cavello. I am the director of emerging technologies at the Aspen Institute, a nonprofit organization based in Washington, DC. I’ve been privileged to serve in many roles across many sectors, from small nonprofits and garage startups to huge multinationals, even the US federal government. I’ve spent most of the last ten years working in AI.

And I am a dreamer, too.

One of my dreams is called public AI.

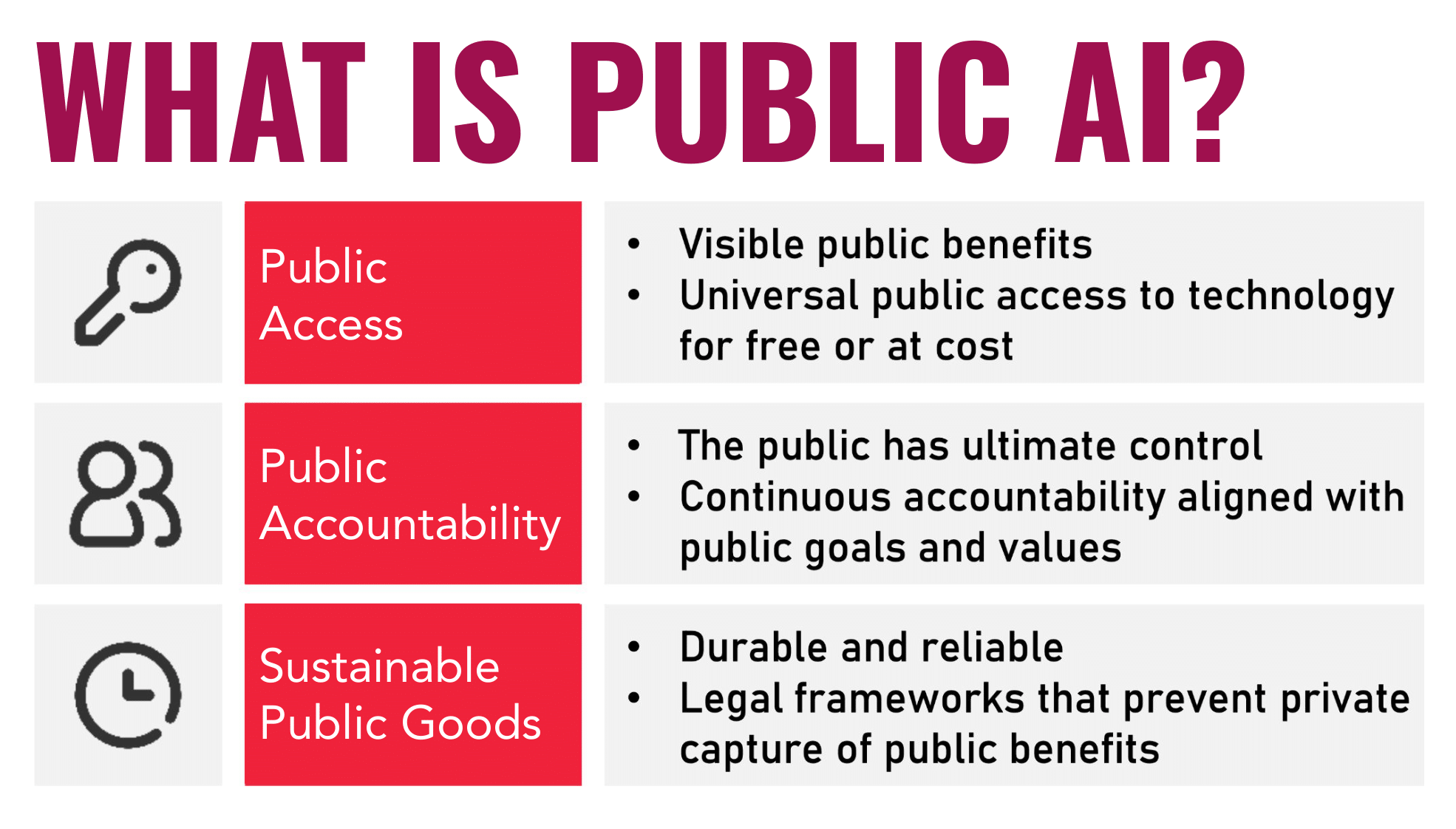

Some of you fellow dreamers know about public AI. But for those who don’t, here’s a primer: Public AI is not a model or a compute network or an AI system. It is an approach. It is a relationship with AI that emphasizes public access, accountability, and sustainable public goods.

Public AI is not government AI, though it can be enabled by governments, non-governmental organizations, or even for-profit companies! Public AI is rooted in the belief that AI is too important, too powerful a tool to be wielded by only the few. Public AI is rooted in the belief that the tools to create the future should rest in the hands of the public. It comes from a recognition that societies don’t have to just consume the technologies shaping their lives—they can and should create them.

Public AI is a practice. It’s a way of doing things. As I like to say, inspired by the preamble of the US Constitution, just as we can form a more perfect Union, we can build a more public AI.

This means expanding access. Recognizing that certain capabilities are so important for participation in public life that access to them should be universal. That’s why Public Access is a key value of public AI—providing everyone with direct, affordable access to these tools so that everyone can realize their potential.

“More public” AI means increasing meaningful public participation and governance. While few technologists would actually oppose the idea of advancing the common good, few AI developers proactively seek public guidance to steer the development process or change course when the public disapproves of their decisions.

This is why Public Accountability is a key value of public AI—giving the public the power to truly shape the development of technology, setting priorities for research and product, including the option to not pursue certain capabilities if they would be harmful.

Finally—and I know this is all-too-familiar for the folks in this room (Funding the Commons)—often developers who do want to do good with technology are unable to secure the resources needed to create lasting public value. (How many brilliant social impact tech projects have faded into obscurity after the hackathon ends and their repos gather dust?)

Meanwhile, the terms of private capital and investment too often lock well-intentioned creators into problematic incentive structures that lead to instability and distract from innovating on the BIG challenges facing our world.

To truly realize the opportunities of AI, we need sustainable development models that enable us to chart a different course. That’s why Sustainable Public Goods are a key value of public AI—creating robust and stable foundations that everyone can innovate upon.

In building “more public” AI, we can shift people’s relationship to these technologies. Today, many of the public’s thoughts about AI are dominated by concern, uncertainty, and fear. By centering the public—with access, accountability, and sustainable public goods—we can shift the conversations toward capabilities, priorities, and potential.

I’m proud to be working with a community of dreamers to make this a reality. There are people, in this room, guaranteeing ACCESS to critical information resources for current and future generations. There is a coalition of public computing labs working to innovate on new models for funding and SUSTAINING public goods. In my own work, I’m collaborating with brilliant minds at the Collective Intelligence Project, Simon Fraser University, and Metagov along with my incredible colleagues at the Aspen Institute to develop new mechanisms for public ACCOUNTABILITY.

Too often, “accountability” is used synonymously with “punishment.” Deterrence is a powerful mechanism, but we can do more than simply reduce harm.

I believe that public accountability is also about being truly responsive to the needs and desires of the public. A “more public” AI ecosystem must not only avoid potential risks, but also clearly articulate priorities, opportunities, and challenges that AI must address in order to be a true public good.

In this room of fellow dreamers, I would like to challenge you: We must more clearly articulate what direction we want to be headed in.

And then: we need to be able to measure it!

Without meaningful measures of progress, AI development may continue to pursue goals that are orthogonal or even antagonistic to the public interest. While there are many claims being made about the potential good AI might enable, we lack answers to basic questions.

Are we delivering on the promises of medical and climate progress we so often hear about? Are the tools being developed today helping us solve the tough problems that we face? Are they enabling increased standards of living? Are they achieving the Sustainable Development Goals? (For example, are the AI capabilities being developed today actually going to help with gender equality? With food security? With decent work?)

If we want to realize the dreams of flourishing that these technologies can inspire, then we need to get concrete about prioritizing progress on the things that matter. Public accountability demands that we be transparent about how things are going so we can live up to the aspirations of the public and make good on the potential of these powerful tools.

Now, I want to be clear: public AI, as an approach, doesn’t necessarily stop other people from pursuing AI in harmful ways. We do still need to stop that, too.

But I sometimes think of public AI as playing the role of outside media smuggled into North Korea, or of the book drops across the Iron Curtain (perhaps like some of the work that Mark Graham and the Wayback team are doing): it proves that another way is possible.

The good news is that we have tools at our disposal for reducing bad behavior, like sensible regulations and public decision-making processes. But, as has happened countless times throughout history, if those tools prove insufficient, the public will find other ways to shut things down, if necessary.

But they need to know that there are alternatives. That there are futures worth building, futures worth fighting for.

Today, there are people all around us who are hungry for better futures. People who know that this isn’t the way things should be. And they know that many of the systems that exist are not working (for them).

They feel lost. Afraid. Even angry. And they’re being sold a false promise.

But we know that that false promise won’t last. The cracks are already showing.

We know that those people are builders, are dreamers, are climate hackers, librarians, archivists, wisdom keepers, philanthropists, institution navigators, extitution bridgers—the public is so many things!

We know this because we are the public, too.

And through the cracks in these failing systems, all around us, the light of our shared dream is shining through.

Thank you.